Evaluating the PCORI Way: Building Our Evaluation Framework

At PCORI, one of our core values is a commitment to evidence. As noted in our Strategic Plan, “We consistently rely on the best available science, and we evaluate our work to improve its reliability and utility.” We are committed to functioning as a learning organization and strive to study and continuously improve upon all aspects of our work.

We are also committed to sharing what we learn to advance the science and practice of patient-centered outcomes research (PCOR). Transparency is another of our core values, even though it will sometimes require shining a light on our shortcomings; we hold ourselves accountable to all of our many passionate stakeholders.

In our effort to function as a learning organization, we pursue a wide range of evaluation activities. These are organized into a framework, which flows mainly from our strategic and operational plans but also, like everything we do, incorporates our stakeholders’ perspectives and priorities.

This blog post, the fourth in our series about PCORI’s evaluation activities, describes our Evaluation Framework and our first steps in building it. Each post in the series links to related materials, including the slides discussed at recent meetings of our PCORI Evaluation Group (PEG); seeks your input on a particular issue; and gives you opportunities to share your feedback.

What Is Our Evaluation Framework?

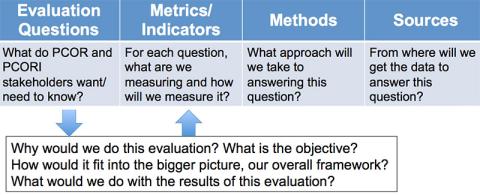

Essentially, our Evaluation Framework organizes all of the questions our stakeholders and PCORI staff have submitted about our work and outlines the questions we’ll address and how we’ll go about answering them. Our framework is not static; it will continue to evolve along with our work because even as we attempt to answer the initial questions, new ones arise.

In developing our framework, we follow the guidelines described in the PCORI Methodology Report for choosing study designs:

- Keep the question and the methodology separate

- Focus on clarifying tradeoffs

- Place individual studies in the context of a program

- Take into account advances in research methodology

This approach balances such factors as the timeliness, resource requirements, and scientific rigor of alternative approaches. The accompanying template shows the scaffolding of the framework, and the box beneath it describes how we consider each question to determine whether we will select it for evaluation.

Getting Started

With the help of our PCORI Evaluation Group (PEG) and many other contributors, we are focused on three main tasks:

- Delineating, organizing, and prioritizing the questions that people have submitted about PCORI’s work and about PCOR in general

- Determining how we can measure some of the elements that we already know will be central to answering many of the questions

- Undertaking many evaluation activities that have emerged as high priorities

The evaluation group, which meets monthly and also offers valuable assistance in one-on-one consultations between meetings, supports our work on these tasks. Group members help us both to outline the big picture (for example, to identify and prioritize questions) and to fill in the details (for example, to develop metrics for our three strategic goals).

This post focuses on the first of these tasks (delineating, organizing, and prioritizing questions); subsequent posts will address the others.

Three Kinds of Questions

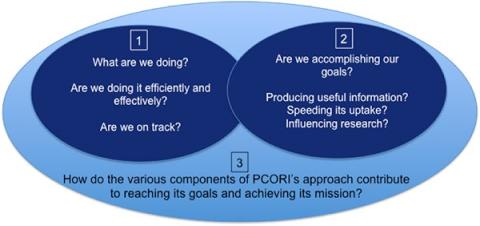

Because it encompasses all of our work and all of our stakeholders’ concerns, our Evaluation Framework can seem quite complex, but it needn’t be. We think of it as having three related sections or sets of questions, and we tackle each separately and in slightly different ways.

1. Questions about Our Day-to-Day Work

This section can contain hundreds of questions about every aspect of our work, which is not surprising given the numerous performance metrics that individuals and teams track at PCORI.

For example, we conduct a wide range of surveys of people who participate in our activities, such as applying for funding, serving on Merit Review Panels, or attending one of our Engagement events. Insight gained from these surveys has allowed us to modify and improve upon all of these processes.

The metrics we use to answer these questions are reflected on our Board of Governors’ Dashboard, which is shared with the public. These and other data we collect are regularly shared with PCORI staff as part of our ongoing effort to improve all of our processes.

2. Questions about Progress toward and Attainment of Our Goals

This component of our framework contains questions about our progress toward our three strategic goals. In our Strategic Plan, we proposed some initial ways to measure progress toward each of these goals, and we have been working with the PCORI Evaluation Group to expand and refine these metrics. In a previous post, we described and sought feedback on an initial version of our criteria for assessing the usefulness of information. Some of our early measures of PCORI’s influence on research will soon appear on our 2014 Board of Governors’ Dashboard, which is in development. A subsequent post will provide greater detail about this component of our Evaluation Framework.

Meaningful measures and a good understanding of what is, and is not, working to make progress toward our goals will help us answer the tough questions in the third section of our framework.

3. Questions about Our Approach: Does “Research Done Differently” Make a Difference?

This section contains many of the most critical and challenging questions. Answering them will depend to a large extent on the information we collect in addressing issues in the other sections. The questions in this section pertain to whether “the PCORI way” actually results in information that is more useful to decision makers, is used more widely and more rapidly, and has a greater impact on outcomes than traditional clinical comparative effectiveness research.

The first part of our draft Evaluation Framework focuses primarily on this section. At this stage of framework development, we are trying to ensure that we have captured the questions that are important to our stakeholders and that we frame and prioritize them appropriately. In addition to some overarching questions, this section contains a set of questions related to each ingredient in the PCORI recipe, such as the engagement of stakeholders in our work and in the research we fund, the Methodology Standards we have developed, and PCORnet, the National Patient-Centered Clinical Research Network, which we are developing.

We invite you to dig into whichever section most interests you and tell us whether we have effectively captured the questions important to you.

Prioritizing Our Questions

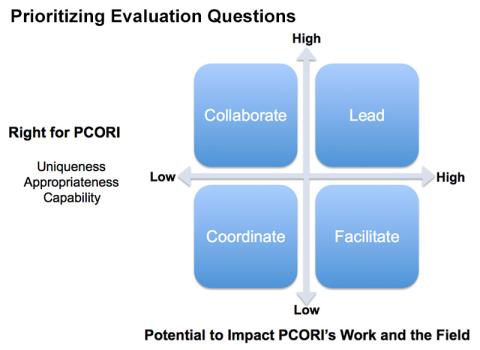

We know we may not be able to answer all of the relevant questions ourselves. We also recognize that some evaluations of our work are best done by outside groups. For example, the Government Accountability Office is charged with regularly reviewing our progress on the responsibilities outlined in our authorizing legislation.

We want to focus our efforts on the evaluations that we are best suited to carry out and that have the greatest potential to improve our work and the science of PCOR. The accompanying figure shows how we sort our questions along two dimensions to distinguish those we should take the lead in answering from those that others can best address.

As you review the framework to determine whether your questions are reflected in it, please let us know which questions you think are most important for us to address and which an external organization might better pursue. As we consider the questions and how to prioritize them (as discussed at the January and March meetings of the PCORI Evaluation Group), those pertaining to engagement as a path to rigorous, useful research are emerging as a top priority because they are:

- About a key aspect of “the PCORI way”

- Important to the field of PCOR generally

- Of interest to a wide range of stakeholders

- Appropriate for us to measure

- Feasible for us to measure in our funded studies

- Possible to evaluate, in some respects, now, without waiting for multi-year studies to be completed

A subsequent blog will provide more detail about our efforts to describe and measure engagement in research and develop ways of studying its impact.

Any Questions?

As we seek to understand and answer questions about PCORI and our work, our Evaluation Framework will continue to evolve. So, if you don’t see your questions reflected in our current materials, please submit them. We count on your input.